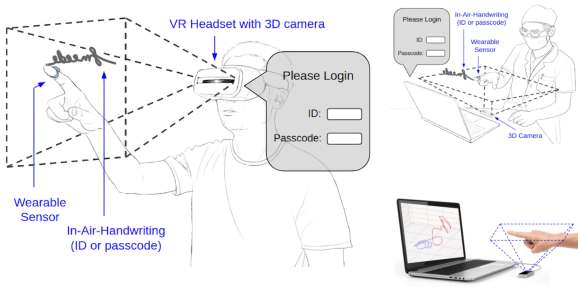

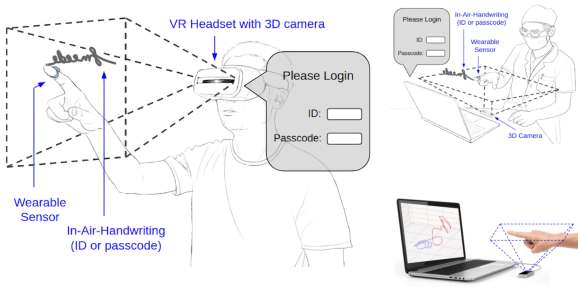

While headset makers improve mixed reality with each passing year, gradually coming closer to merging the real and digital worlds in what users see and hear, input (the ability to interact with VR or AR environments) remains a challenge, since controllers are still required for most interactions. Last week, researchers from Arizona State University demonstrated a potential alternative called FMKit, which lets headsets accurately track the movement of individual fingers and recognize handwriting in the air.

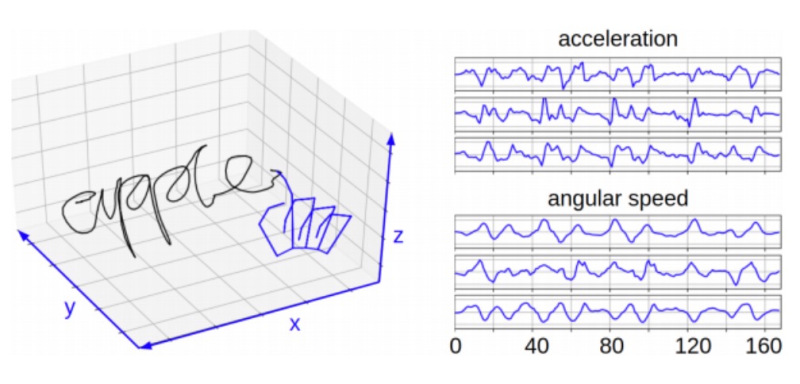

The university's work goes beyond the hand tracking seen in Leap Motion accessories and Oculus Quest headsets: it records the path an individual finger traces in three-dimensional space and compares it against four datasets of handwriting samples. Writing with a fingertip can be used to identify individual users, to authenticate users securely by passcode, and to enter text as an alternative to typing, speaking, or selecting words with a hand controller.

Beyond the system's value as a way of turning words written in the air into text, which is the feature the researchers focused on, there are potential business applications. A distinctive signature drawn in the air could unlock a protected XR headset or an individually protected application, letting companies personalize the protection of digital content. Alternatively, companies could let teams use a shared passcode system that goes beyond numbers or letters and recognizes symbols such as a five-pointed star or other distinctive signs.

FMKit currently supports two types of input devices: a Leap Motion controller and a custom glove that captures inertial measurement data. It comes with Python modules for collecting, preprocessing, and visualizing the captured signals. For user identification, FMKit achieves more than 93% accuracy with the Leap Motion and nearly 96% with the glove. For handwriting recognition, however, the Leap Motion results are better, and in the best case the system recognizes the writing correctly 87.4% of the time. That is not enough to replace voice dictation, but it is a good start for a system that needs only a sensor mounted on the finger or the head.
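To make the idea of matching an in-air gesture against a stored sample more concrete, here is a minimal sketch in Python. It is not FMKit's API and not necessarily the researchers' method: the function names (`normalize`, `dtw_distance`, `matches_template`) and the threshold value are hypothetical, and dynamic time warping is used only as a common, simple stand-in for comparing two fingertip trajectories recorded at different speeds.

```python
import math


def normalize(traj):
    """Center a 3D trajectory on its centroid and scale it to unit RMS radius.

    This removes differences in where and how large the gesture was drawn.
    """
    n = len(traj)
    cx = sum(p[0] for p in traj) / n
    cy = sum(p[1] for p in traj) / n
    cz = sum(p[2] for p in traj) / n
    centered = [(x - cx, y - cy, z - cz) for x, y, z in traj]
    rms = math.sqrt(sum(x * x + y * y + z * z for x, y, z in centered) / n)
    scale = rms or 1.0  # avoid dividing by zero for a degenerate trajectory
    return [(x / scale, y / scale, z / scale) for x, y, z in centered]


def dtw_distance(a, b):
    """Dynamic time warping cost between two sequences of 3D points."""
    inf = float("inf")
    prev = [inf] * (len(b) + 1)
    prev[0] = 0.0  # cost of aligning two empty prefixes
    for i in range(1, len(a) + 1):
        curr = [inf] * (len(b) + 1)
        for j in range(1, len(b) + 1):
            cost = math.dist(a[i - 1], b[j - 1])
            # Extend the cheapest of the three allowed alignment moves.
            curr[j] = cost + min(prev[j], curr[j - 1], prev[j - 1])
        prev = curr
    return prev[len(b)]


def matches_template(sample, template, threshold=0.25):
    """Return True if the sample is close enough to the enrolled template.

    The threshold is an illustrative assumption, not a tuned value.
    """
    a, b = normalize(sample), normalize(template)
    # Divide by the combined length so long gestures are not penalized.
    return dtw_distance(a, b) / (len(a) + len(b)) <= threshold
```

In a passcode scenario like the one described above, `template` would be the gesture recorded at enrollment and `sample` the gesture drawn at login. A real system would also have to handle sensor noise, rotation, and multiple enrolled samples per user.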

The researchers have published FMKit on GitHub as an open source project, including the code library and the datasets, in the hope that other researchers will build on their work. The authors presented their research at the CVPR 2020 workshop on computer vision for augmented and virtual reality.
