Researchers at Google have developed the first end-to-end 6DoF video system, and it can even stream over a high-bandwidth internet connection.

Today's 360-degree video can take you to exotic places and events, and you can look around, but you can't move your head forward or backward. This makes the whole world feel locked to your head, which really isn't the same thing as being there.

Google's new system covers the entire video stack: capture, reconstruction, compression and rendering. The result is a significant step forward.

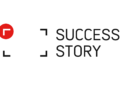

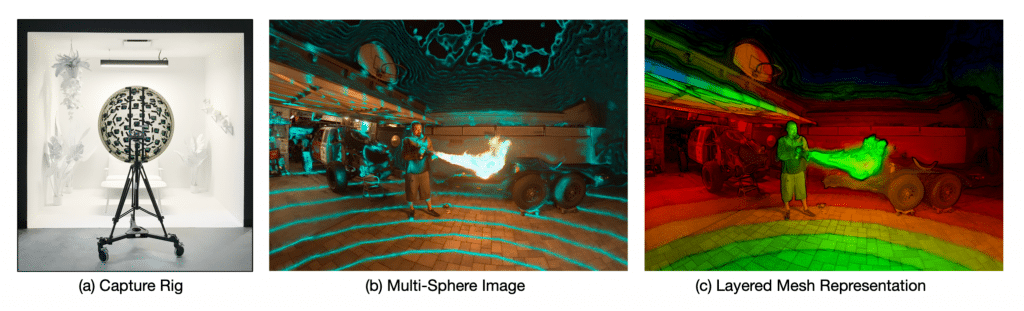

The rig uses 46 synchronized 4K cameras running at 30 frames per second. Each camera is mounted on a "cheap" acrylic dome. Because the acrylic is translucent, the dome can even double as a viewfinder.

Each camera retails for $160, so the cameras for the rig come to just over $7,000 (46 × $160 = $7,360). That may seem expensive, but it is far cheaper than custom-built alternatives. 6DoF video is a new technology that is only now becoming viable.

The result is a 220-degree light field 70 cm wide, which is how far you can move your head. The resolution is about 10 pixels per degree, so it will probably look somewhat blurry on any modern headset except the original HTC Vive. As with all technology, this will improve over time.
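To put the 10 pixels-per-degree figure in perspective, the rough arithmetic below converts between field of view, angular resolution and pixel count. It is a back-of-the-envelope sketch that assumes pixels are spread evenly across the field of view, which real headset optics do not do; the 2000-pixel, 100-degree display is a hypothetical example, not the spec of any real headset.

```python
def pixels_across(fov_degrees: float, pixels_per_degree: float) -> float:
    """Pixels needed to cover `fov_degrees` at a given angular resolution."""
    return fov_degrees * pixels_per_degree


def angular_resolution(pixels: float, fov_degrees: float) -> float:
    """Average pixels per degree when `pixels` are spread evenly over `fov_degrees`."""
    return pixels / fov_degrees


# The article's figures: a 220-degree light field at about 10 pixels per degree.
print(pixels_across(220, 10))  # 2200

# A hypothetical display with 2000 pixels across a 100-degree field of view.
print(angular_resolution(2000, 100))  # 20.0
```

On such a hypothetical display, each pixel of 10 pixels-per-degree content would be stretched over roughly two display pixels, which is why it would look soft.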

But what's really impressive is the compression and rendering. The light field video can be streamed over a 300 Mbps internet connection. That is still far above average internet speeds, but most major cities now offer that kind of bandwidth.
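To get a feel for what a 300 Mbps stream means, the sketch below works out the average data budget per frame at 30 fps and compares the stream with the uncompressed output of the capture rig. The 300 Mbps, 30 fps and 46-camera figures come from the article; the 3840x2160 frame size and 24 bits per pixel are assumptions. The final ratio only gives a rough sense of scale, not a measured compression ratio, because the streamed representation is not the same data as the raw camera frames.

```python
# Back-of-the-envelope data-rate arithmetic. The 300 Mbps stream rate, 30 fps
# and 46 cameras come from the article; the frame size and bits per pixel are
# assumptions for illustration only.
STREAM_BITRATE_BPS = 300e6
FRAME_RATE = 30
NUM_CAMERAS = 46
FRAME_WIDTH, FRAME_HEIGHT = 3840, 2160  # assumed "4K" UHD resolution
BITS_PER_PIXEL = 24                     # assumed 8-bit RGB, uncompressed


def stream_bytes_per_frame(bitrate_bps: float, fps: float) -> float:
    """Average number of bytes available for each frame of the stream."""
    return bitrate_bps / fps / 8


def raw_capture_bitrate(cameras: int, width: int, height: int, bpp: int, fps: float) -> float:
    """Uncompressed bits per second produced by all cameras together."""
    return cameras * width * height * bpp * fps


per_frame = stream_bytes_per_frame(STREAM_BITRATE_BPS, FRAME_RATE)
raw = raw_capture_bitrate(NUM_CAMERAS, FRAME_WIDTH, FRAME_HEIGHT, BITS_PER_PIXEL, FRAME_RATE)
print(f"Budget per frame: {per_frame / 1e6:.2f} MB")           # 1.25 MB
print(f"Raw capture: {raw / 1e9:.1f} Gbit/s")                  # 274.7 Gbit/s
print(f"Raw-to-stream ratio: {raw / STREAM_BITRATE_BPS:.0f}x")  # 916x
```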

How does it work?

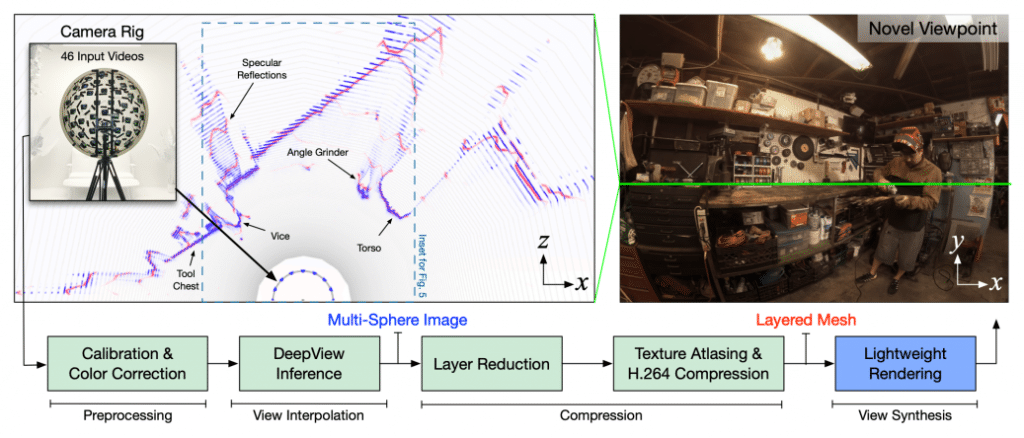

In 2019, Google AI researchers developed a machine learning algorithm called DeepView. Given 4 images of the same scene taken from slightly different viewpoints, DeepView can create a depth map and even generate new images from arbitrary viewpoints.

The new 6DoF video system uses a modified version of DeepView. Instead of representing the scene as a stack of flat 2D planes, the algorithm uses a set of concentric spherical shells. A further processing step then condenses this output into a much smaller number of shells.
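A viewer inside a set of concentric shells can see the scene from a slightly shifted position because each view ray crosses every shell at a different point. The sketch below shows one generic way to render such a layered representation: intersect a ray from the (moved) eye with each shell, then blend the shells front to back with the standard "over" operator. It is not Google's renderer. For brevity each hypothetical shell carries a single RGBA colour, whereas a real shell would store a texture looked up at the hit point; positions are assumed to be in metres relative to the rig centre, and the ray direction is assumed to be unit length.

```python
import math

Vec3 = tuple[float, float, float]
RGBA = tuple[float, float, float, float]


def dot(a: Vec3, b: Vec3) -> float:
    return a[0] * b[0] + a[1] * b[1] + a[2] * b[2]


def exit_distance(origin: Vec3, direction: Vec3, radius: float) -> float:
    """Distance along a unit-length ray, starting inside a sphere centred at
    the rig centre, to the point where the ray leaves that sphere.

    Solves |origin + t * direction|^2 = radius^2 and keeps the positive root."""
    b = dot(origin, direction)
    c = dot(origin, origin) - radius * radius  # negative while origin is inside
    return -b + math.sqrt(b * b - c)


def render_ray(origin: Vec3, direction: Vec3, shells: list[tuple[float, RGBA]]) -> tuple[float, ...]:
    """Front-to-back "over" compositing of concentric RGBA shells along one ray."""
    hits = sorted(
        ((exit_distance(origin, direction, radius), rgba) for radius, rgba in shells),
        key=lambda hit: hit[0],  # nearest shell first
    )
    colour = [0.0, 0.0, 0.0]
    transmittance = 1.0  # fraction of light from farther shells still visible
    for _, (red, green, blue, alpha) in hits:
        for i, channel in enumerate((red, green, blue)):
            colour[i] += transmittance * alpha * channel
        transmittance *= 1.0 - alpha
    return tuple(colour)


# Hypothetical scene: eye moved 10 cm forward, looking straight ahead, with a
# half-transparent red shell at 1 m and an opaque blue shell at 2 m.
shells = [(1.0, (1.0, 0.0, 0.0, 0.5)), (2.0, (0.0, 0.0, 1.0, 1.0))]
pixel = render_ray((0.0, 0.0, 0.1), (0.0, 0.0, 1.0), shells)
print(pixel)  # (0.5, 0.0, 0.5)
```

Compositing front to back lets a renderer stop early once the transmittance reaches zero, which is one reason it is a common choice for layered representations.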

Finally, these spherical layers are converted into a much lighter "layered mesh", which samples its textures from a texture atlas to save further resources. This technique is borrowed from game engines, where the textures for different models are stored in a single file, tightly packed together.
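Texture atlasing amounts to packing many small rectangular textures into one larger image and recording where each one ended up. The sketch below uses a simple "shelf" packer, which places rectangles left to right in rows, tallest first, and then converts each placement to normalised UV coordinates that a mesh could use to sample the atlas. Shelf packing is just one common heuristic chosen for illustration; the article does not say how Google packs its atlas, and the texture names and sizes are hypothetical.

```python
from dataclasses import dataclass


@dataclass
class Placement:
    name: str
    x: int
    y: int
    width: int
    height: int


def shelf_pack(textures: dict[str, tuple[int, int]], atlas_width: int) -> tuple[list[Placement], int]:
    """Pack (width, height) rectangles into rows ("shelves") of a fixed-width atlas.

    Returns the placements and the total atlas height used."""
    # Tallest first, so each shelf wastes little vertical space.
    order = sorted(textures.items(), key=lambda item: item[1][1], reverse=True)
    placements = []
    x = y = shelf_height = 0
    for name, (w, h) in order:
        if w > atlas_width:
            raise ValueError(f"{name} is wider than the atlas")
        if x + w > atlas_width:  # no room left on this shelf: start a new one
            y += shelf_height
            x = shelf_height = 0
        placements.append(Placement(name, x, y, w, h))
        x += w
        shelf_height = max(shelf_height, h)
    return placements, y + shelf_height


def uv_rect(p: Placement, atlas_width: int, atlas_height: int) -> tuple[float, float, float, float]:
    """Normalised (u0, v0, u1, v1) texture coordinates of one placement."""
    return (p.x / atlas_width, p.y / atlas_height,
            (p.x + p.width) / atlas_width, (p.y + p.height) / atlas_height)


# Hypothetical textures for three mesh layers, packed into a 128-pixel-wide atlas.
atlas_width = 128
placements, atlas_height = shelf_pack(
    {"layer0": (64, 32), "layer1": (32, 32), "layer2": (64, 16)}, atlas_width
)
for p in placements:
    print(p.name, (p.x, p.y), uv_rect(p, atlas_width, atlas_height))
```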

You can read the research paper and try some samples in your browser on Google's public project page.

Light field video is still an early-stage technology, so don't expect YouTube to support light field video any time soon. But it seems that one of the "holy grails" of VR content, 6DoF video streaming, is now a solvable problem.

Source