With eye tracking and foveated rendering, only the part of the VR image the user is currently looking at is rendered at full resolution. This saves a lot of computing power, which can instead be spent on higher image resolution or more sophisticated graphical effects. Taiwanese researchers are now taking another step in this direction.

Scientists from National Taiwan University have developed an AI that displays the foveal region at a much higher resolution and frame rate without excessive computational work. The result is a clearer, steadier image, which should benefit even VR headsets with low-resolution displays.

The additional frames and the higher resolution in the foveal region are calculated by artificial intelligence, so they are synthetic. The increased refresh rate falls off toward the edges of the foveal region, which gives a smoother transition to the standard refresh rate.
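A minimal sketch of what such a graded falloff could look like, assuming a simple linear ramp across a transition band. The function name `refresh_rate_at`, the radii, and the 120 Hz and 60 Hz values are illustrative assumptions and are not taken from the paper.

```python
def refresh_rate_at(distance, inner_radius, outer_radius,
                    boosted_hz=120.0, base_hz=60.0):
    """Return the refresh rate at `distance` from the center of the foveal region.

    Inside `inner_radius` the boosted rate applies, beyond `outer_radius`
    the standard rate applies, and in between the rate ramps down linearly.
    All values are hypothetical and chosen only for illustration.
    """
    if outer_radius <= inner_radius:
        raise ValueError("outer_radius must be larger than inner_radius")
    if distance <= inner_radius:
        return boosted_hz
    if distance >= outer_radius:
        return base_hz
    # Fraction of the way through the transition band (0 at inner, 1 at outer).
    t = (distance - inner_radius) / (outer_radius - inner_radius)
    return boosted_hz + t * (base_hz - boosted_hz)
```

With these assumed values, a point halfway through the transition band would be updated at a rate midway between the boosted and the standard rate.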

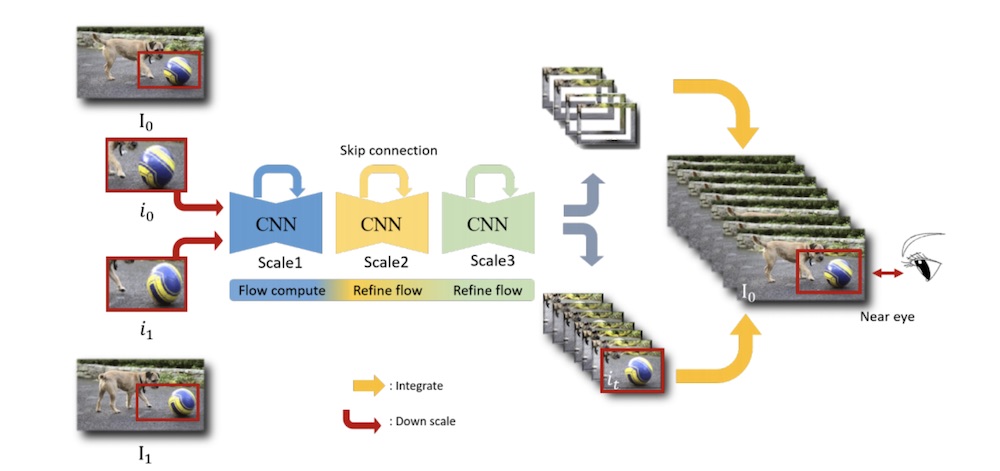

Here is how the synthetic increase in refresh rate in the foveal region works: the AI takes two consecutive crops of the foveal region (i0 and i1) from the image and calculates an additional intermediate image (it), including a surrounding border with graduated refresh rates. In the end, all three components are combined.
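The idea can be sketched in a few lines of Python. This is not the researchers' method: a plain linear cross-fade stands in for the learned interpolation, the graduated border is left out, and the helper names `blend_frames` and `composite` are hypothetical. Images are represented as nested lists of grayscale values to keep the example dependency-free.

```python
def blend_frames(i0, i1, t=0.5):
    """Linear cross-fade between two equally sized grayscale crops.

    This is only a stand-in for the learned interpolation described in the
    article; a real system would use a neural network here.
    """
    return [[(1.0 - t) * a + t * b for a, b in zip(row0, row1)]
            for row0, row1 in zip(i0, i1)]


def composite(frame, patch, top, left):
    """Return a copy of `frame` with `patch` pasted at row `top`, column `left`."""
    out = [row[:] for row in frame]
    for r, patch_row in enumerate(patch):
        out[top + r][left:left + len(patch_row)] = patch_row
    return out


# Two consecutive 2x2 foveal crops (toy data) and the intermediate image.
i0 = [[0.0, 0.0], [0.0, 0.0]]
i1 = [[1.0, 1.0], [1.0, 1.0]]
it = blend_frames(i0, i1)

# Paste the intermediate crop into a 4x4 full frame at the fixation area.
frame = [[0.2] * 4 for _ in range(4)]
result = composite(frame, it, top=1, left=1)
```

In this toy setup the periphery keeps its original values while the foveal crop receives the synthesized in-between frame, which is the step that raises the effective refresh rate in that region.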

Foveated rendering is not yet ready for the market

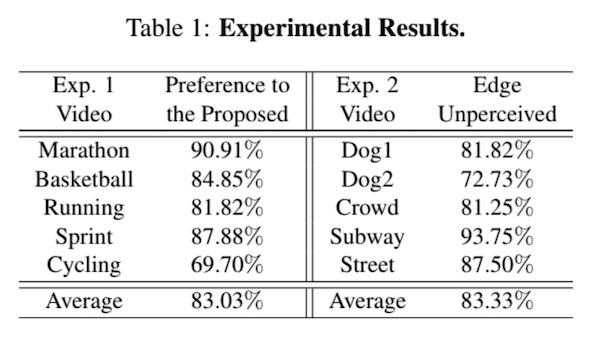

The research team tested the AI's image enhancement in an experiment with PlayStation VR. On average, 83% of study participants perceived an improved image without visible edges.

For the experiment, the researchers used ten videos and a fixed image region without eye tracking. A possible reason: foveated rendering requires extremely fast and accurate eye tracking, and the relevant technology does not always work reliably.

In any case, the AI enhancement will not arrive in VR headsets tomorrow: variable refresh rates within a single image will probably require adapted rendering pipelines, or even adapted displays, to be developed first.

In their paper, the researchers do not reveal how they were able to simulate the different refresh rates. The results of the study were presented at the CVPR 2020 conference.
