Did you know that the World Economic Forum estimates that 7 million jobs will be lost to digital transformation over the next five years? One sector at high risk of automation through Artificial Intelligence is services, including translation and interpreting. But are machines really capable of capturing context as a human does and carrying it over into another language?

If you visit the website Will Robots Take My Job, you will see that the probability of AI taking over your job as a translator in the future is almost 40%. Although that is not as high as the probability for bus drivers, it makes clear that in the not too distant future it will be a machine that takes care of translations between languages.

There is already a wide range of widgets and gadgets dedicated to this essential task in a globalized world. We have widgets like Google Translate and new gadgets like masks that translate into several languages, but do they really offer the same quality as a translation done by a person? One issue that becomes increasingly important when we evaluate the potential problems of using AI is bias and, above all in this case, gender bias.

What is gender bias and how does it manifest itself in machine translation?

The RAE defines bias as a “systematic error that can be made when sampling or testing selects or favors some responses over others.” This means that bias is an inclination towards one thing, person or group and against another, shaped by our cultural learning. Gender bias, therefore, is attributing something to someone because of their gender. Saying that women do not know how to drive is an example of gender bias, and the same happens with certain professions. Professions such as judge or doctor were traditionally more closely linked to men, while care work was mostly attributed to women.

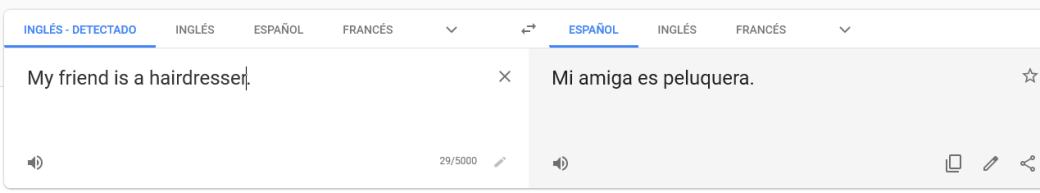

Although we have made great progress towards parity and equality for women, it is striking that technology, which should improve human lives and offer an advanced vision of the world, reproduces what we are already overcoming in analog life. With some widgets, Google Translate for example, we can see that translations are still based on the analysis of raw data, with no attention paid to removing gender biases.

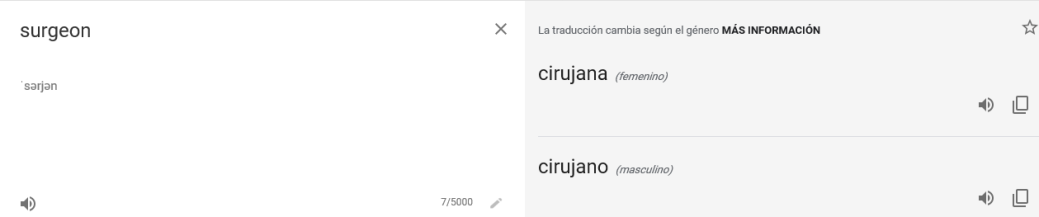

In this type of machine translation we see that it still leans towards certain stereotypes. In the field of work, we observe a tendency to translate professions that were historically associated with women using the feminine form. Although most automatic translators have already improved quite a bit in this respect, we still come across biased examples like the ones you see in the image.

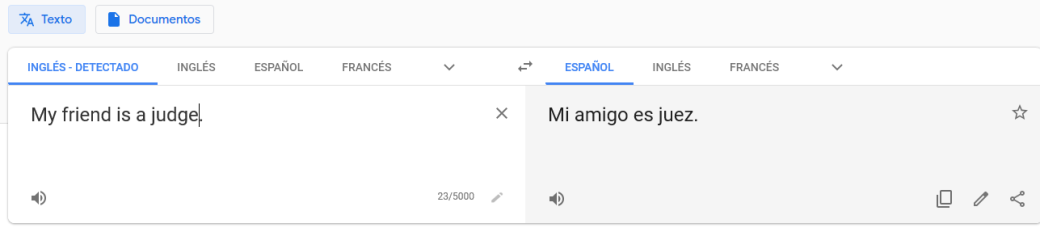

The same goes for professions traditionally more associated with men such as the role of judge.

How can gender bias be addressed?

Although the problem of gender bias in general is not yet solved, we do see advances in the use of AI in computer-assisted translation. At least when we translate isolated terms without context, we get an unbiased result that shows both possibilities. In addition, the tool informs us that the translation changes according to gender. AI translation should offer us this kind of choice in all cases to free us from bias.
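To make the idea of "indicating both possibilities" concrete, here is a minimal Python sketch. It is not how any real translator works: the tiny English-to-Spanish lexicon and the `translate_term` function are hypothetical and exist only for illustration. The point is simply that, for an isolated term with no context, the output carries every gendered form instead of silently picking one.

```python
# Hypothetical mini-lexicon (illustration only):
# English term -> (masculine, feminine) Spanish forms.
LEXICON = {
    "doctor": ("doctor", "doctora"),
    "judge": ("juez", "jueza"),
    "nurse": ("enfermero", "enfermera"),
}


def translate_term(term: str) -> dict:
    """Return both gendered forms for an isolated, gender-ambiguous term."""
    key = term.strip().lower()
    if key not in LEXICON:
        raise KeyError(f"no entry for {term!r} in the toy lexicon")
    masculine, feminine = LEXICON[key]
    return {
        "masculine": masculine,
        "feminine": feminine,
        # Flag that tells the user the translation depends on gender.
        "gender_specific": masculine != feminine,
    }


print(translate_term("judge"))
```

A real system would also need context handling: when the sentence already makes the gender clear, only the matching form should be returned.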

Another solution is to increase the number of women professionals in the digital field. According to a UN report, only 12% of AI researchers and 6% of software developers are women, which is one driver of the sexist behavior of virtual assistants.

In the end, programmers feed the AI with data of their choice, so models are only as good as the data they receive. It matters whether the programmers are mostly men or mostly women, since they bring their own vision of the world, and the biases that come with it, into that data. With a vision that takes into account a representation of all groups, categories and collectives, we can create a more neutral framework in the data with which we feed Artificial Intelligence. Therefore, it is of the utmost importance that companies bet on models that are free of bias and built on a strong ethical component, not only in computer-assisted translation, but as a general commitment when training AI.
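One simple way to see how a skew in the data could reach a model is to count which pronouns appear alongside a profession in the training text. The Python sketch below does this for a toy corpus; the sentences, the pronoun lists and the `pronoun_counts` helper are all invented for illustration and are not taken from any real dataset or tool.

```python
import re
from collections import Counter

MASCULINE = {"he", "him", "his"}
FEMININE = {"she", "her", "hers"}


def pronoun_counts(sentences, profession):
    """Count gendered pronouns in sentences that mention the profession."""
    counts = Counter()
    for sentence in sentences:
        tokens = re.findall(r"[a-z]+", sentence.lower())
        if profession not in tokens:
            continue
        counts["masculine"] += sum(t in MASCULINE for t in tokens)
        counts["feminine"] += sum(t in FEMININE for t in tokens)
    return counts


# Toy corpus, made up for this example.
TOY_CORPUS = [
    "The judge said he would rule tomorrow.",
    "The judge read his notes.",
    "The nurse said she was tired.",
    "The judge said she disagreed.",
]

print(pronoun_counts(TOY_CORPUS, "judge"))
```

In this toy corpus, "judge" co-occurs with two masculine pronouns and one feminine pronoun. A model trained only on text like this would have more evidence for the masculine reading, which is the kind of imbalance a more representative dataset aims to reduce.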

Aura, an example of Artificial Intelligence that shows a commitment to ethics and to reducing bias

Telefónica is putting a lot of attention and effort into making its AI, Aura, an inclusive and diverse platform. The team works constantly to identify the major challenges it faces and regularly launches research into how the industry is tackling them, how they are perceived by society in different countries, and which technological advances can help achieve a platform without biases.

These challenges range from the assistant’s tone of voice in each of its answers, through language understanding and equal voice recognition across sexes and ages, to the modeling of data to obtain insights. Aura is therefore an artificial being that has no gender and does not eat, drink or feel. Aura does not present itself to the client as something it is not, nor does it reproduce human behaviors that continue to feed that type of bias.

The Aura platform team works hard to ensure that all developers who build on Aura comply with these principles. It’s not always easy, though, so periodic audits are carried out to help correct possible deviations in that tone of voice. It is important to insist on an Artificial Intelligence that is free of bias and grounded in ethics, in order to achieve an egalitarian future both in artificial language and in conversations between people.