American engineers have created a special attachment for smartphone-based VR glasses that lets the phone determine the user's body pose and lip shape, as well as their appearance. The attachment consists of two mirrored hemispheres mounted in front of the smartphone's camera, which let the camera act as a simple depth camera. The work was presented at the UIST 2019 conference.

Many modern virtual reality systems are equipped with motion-capture sensors, which make it possible to represent the user more realistically in the virtual world and to let them interact with virtual objects using their hands. Such a system usually consists of several cameras on the front of the headset, pointing in different directions. Besides full-fledged VR headsets, however, there are also cardboard VR glasses, which work with a smartphone inserted into them that displays the virtual world on its screen.

Since most modern smartphones have relatively high-quality cameras, engineers at Carnegie Mellon University led by Robert Xiao proposed using the built-in camera to track the user's body movements. In VR glasses, however, the smartphone is positioned so that its camera faces away from the user, so it cannot be used directly to capture body movements. The authors solved this problem by placing two mirrored hemispheres in front of the camera.

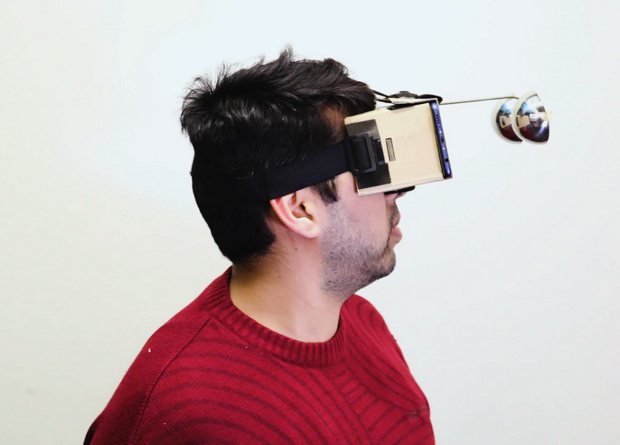

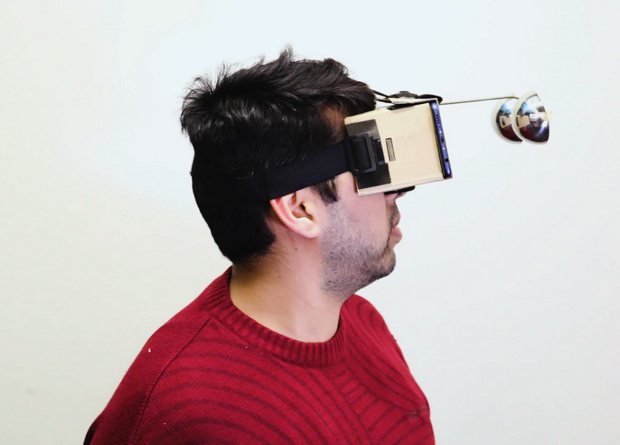

The engineers' prototype consists of ordinary cardboard VR glasses (several models were tested during development, including Google Cardboard) with a wire attached that holds a mirror-finish hemisphere at each end. Because of the mirrors' shape, the smartphone camera can capture a substantial portion of the surrounding space, including the entire front of the user's body. Two hemispheres are used instead of one so that the algorithms can recover depth information for the frame from two images taken from different angles.
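Recovering depth from two viewpoints rests on triangulation: a point that appears shifted by a disparity of d pixels between two views separated by a baseline B lies at depth Z = f·B/d, where f is the focal length in pixels. The sketch below shows only this textbook case of two rectified pinhole views; it is not the authors' method, it ignores the distortion introduced by the curved mirrors (which the real system has to handle through calibration), and the function name and numbers are illustrative assumptions.

```python
import numpy as np

def depth_from_disparity(disparity, focal_px, baseline_m):
    """Depth in metres for each disparity value (pixels), using Z = f * B / d.

    Simplified rectified-pinhole model; pixels with zero or negative
    disparity (no match found) are reported as infinitely far away.
    """
    disparity = np.asarray(disparity, dtype=float)
    depth = np.full(disparity.shape, np.inf)
    valid = disparity > 0
    depth[valid] = focal_px * baseline_m / disparity[valid]
    return depth

# Hypothetical values: 500 px focal length, views 10 cm apart.
print(depth_from_disparity([25, 50, 0], focal_px=500, baseline_m=0.1))
```

The inverse relationship explains why a wider spacing between the two mirrors would help: for the same depth, a larger baseline produces a larger, easier-to-measure disparity.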

After a photo is taken, the smartphone sends it to a server. There, the spherical images are first unwrapped into rectangular ones, which is possible because the distance from the camera to the mirrored sphere, the shooting angle and the calibration data are known. The rectangular image is then analyzed by the OpenPose algorithm, which marks key points on the body corresponding to the joints and other body parts. To determine the shape of the lips, a separate neural network is used that can distinguish five configurations.
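The unwrapping step can be illustrated with a common simplification for mirror-ball images: the mirror appears as a disc, and each output column is mapped to an azimuth angle and each output row to a radius around the disc's center. This is a minimal sketch, not the authors' projection model; it assumes the disc center and radii (`center`, `r_min`, `r_max`) are already known from calibration, and it uses nearest-neighbour sampling for brevity. The function name is hypothetical.

```python
import numpy as np

def unwrap_mirror_disc(img, center, r_min, r_max, out_h, out_w):
    """Unwrap the annulus r_min..r_max around `center` (x, y) into an
    out_h x out_w rectangle: columns -> azimuth angle, rows -> radius.
    Works for grayscale (H, W) and colour (H, W, C) arrays."""
    cx, cy = center
    theta = 2.0 * np.pi * np.arange(out_w) / out_w                  # azimuth per column
    radius = r_min + (r_max - r_min) * np.arange(out_h) / max(out_h - 1, 1)  # radius per row
    r, t = np.meshgrid(radius, theta, indexing="ij")                # both (out_h, out_w)
    # Source pixel for every output pixel, rounded to the nearest integer
    # and clamped so it stays inside the input image.
    xs = np.clip(np.rint(cx + r * np.cos(t)).astype(int), 0, img.shape[1] - 1)
    ys = np.clip(np.rint(cy + r * np.sin(t)).astype(int), 0, img.shape[0] - 1)
    return img[ys, xs]

frame = np.arange(100).reshape(10, 10)   # stand-in for a camera frame
panorama = unwrap_mirror_disc(frame, center=(5, 5), r_min=0, r_max=4, out_h=5, out_w=8)
print(panorama.shape)
```

A production pipeline would typically use bilinear interpolation and a mirror-specific mapping from radius to viewing angle; the rectangular result is what a pose estimator such as OpenPose would then receive.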

The authors propose using the system to recognize the user's gestures, so that they can control virtual objects and applications with their hands. They also demonstrated another application: they trained the algorithm to create a virtual avatar from the camera data, colored to match the user's clothing.
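The article does not say how the avatar is colored. One plausible approach, shown here purely as a hypothetical sketch, is to sample the unwrapped image along the segment between two body key points (for example, shoulder and elbow) and take the median color as the color of the corresponding avatar limb. The function name, the (x, y) key-point format and the sample count are assumptions.

```python
import numpy as np

def limb_color(img, p_start, p_end, samples=20):
    """Median colour of `img` along the segment between two (x, y) key points.

    Hypothetical helper: the median makes the estimate robust to a few
    pixels of background or shadow falling on the sampled line.
    """
    t = np.linspace(0.0, 1.0, samples)[:, None]                     # interpolation weights
    pts = (1 - t) * np.asarray(p_start, float) + t * np.asarray(p_end, float)
    xs = np.clip(np.rint(pts[:, 0]).astype(int), 0, img.shape[1] - 1)
    ys = np.clip(np.rint(pts[:, 1]).astype(int), 0, img.shape[0] - 1)
    return np.median(img[ys, xs].reshape(samples, -1), axis=0)

# Toy image: left half red "sleeve", right half black background.
image = np.zeros((10, 10, 3))
image[:, :5] = [255, 0, 0]
print(limb_color(image, p_start=(1, 1), p_end=(1, 8)))
```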
