![]()

Facebook Reality Labs has published a detailed study of the method of creating hyper-realistic virtual avatars in real time, detailing previous work that the company calls “Codec Avatars”.

Facebook Reality Labs has created a system that can animate virtual avatars in real time with unprecedented accuracy thanks to its compact hardware. With just three standard cameras in the headset that capture the user’s eyes and mouth, the system is able to more accurately display the nuances of complex facial gestures of a particular person than previous methods.

The point of the study is not only to attach the cameras to the headset, but also to the “technical magic” underlying the use of incoming images for a virtual representation of the user.

The solution relies heavily on machine learning and computer vision. “Our system works in real time and with a wide range of expressions, including puffed cheeks, lip biting, tongue movement, and details such as wrinkles that are difficult to accurately reproduce with previous methods,” says one of the authors.

Facebook Reality Labs has published a technical video summary of the work for SIGGRAPH 2019:

The group has also published its full research paper, which delves even deeper into the system’s methodology. Work titled“Face animation using Virtual Reality using Multiview Image Translation” was published in ACM Transactions on Graphics. The authors of the article are Shi-En Wei, Jason Saragih, Thomas Simon, Adam W. Harley, Steven Lombardi, Michal Perdock, Alexander Hyips, Dawei Wang, Hernan Badino, Yazer Sheikh.

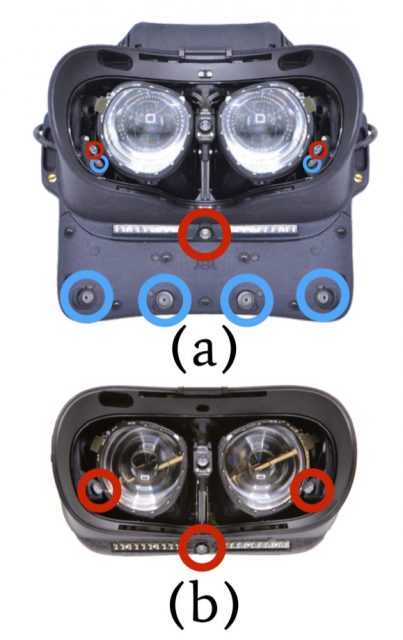

(a) A Training headset with nine cameras. (b) Tracking headset with three cameras; the camera positions used for the training headset are circled in red.

(a) A Training headset with nine cameras. (b) Tracking headset with three cameras; the camera positions used for the training headset are circled in red.

The document explains how the project involved the creation of two separate experimental headsets, the “Training” headset and the “Tracking”headset.

The training headset has a higher volume and uses nine cameras that allow it to capture a wider range of views of the subject’s face and eyes. This makes it easier to find a “match” between the input images and the user’s previously captured digital scan (deciding which parts of the input images represent which parts of the avatar). The document states that this process is “automatically detected by self-translating multi-view images, which does not require manual annotations or unambiguous matching between domains.”

Once the match is established, you can use a more compact “Tracking”headset. The alignment of the three cameras reflects three of the nine cameras on the Training headset. The views of these three cameras are better understood thanks to the data collected from the Training headset, which allows the input to accurately control the avatar animation.

The paper focuses on the accuracy of the system. The previous methods produce a realistic result, but the accuracy of the user’s actual face compared to the representation is impaired in key areas, especially with extreme expressions and the relationship between what the eyes do and what the mouth does.

![]()

As impressive as this approach is, it still faces serious challenges to the development of the technology. Relying on both a detailed pre – scan of the user and the initial need to use the “Training” headset will require something similar to the” scan centers ” where users can go to scan and train their avatar. Until VR becomes a significant socializing part of society, it is unlikely that such centers will be viable. However, advanced sensing technologies and continuous improvements in building automatic correspondence at the peak of this work may eventually lead to the viability of this process in the home. The question remains: when will this happen…?