An algorithm capable of tracking hand movements could become the basis of a system for translating sign language in real time.

One promising application of artificial intelligence is the automatic translation of speech and text. Accuracy keeps improving over time, but it does not yet reach a professional level. Still, for everyday communication, and in certain languages, the technology already does the job well.

Some languages are harder than others, of course. Translating from English to French or Spanish is relatively straightforward for a machine translator, but translating from Arabic or Chinese to English is significantly more complicated. And with sign language, the task becomes harder still.

There are some initiatives aimed at recognizing sign language, but they usually require high-powered equipment. Researchers at Google's AI lab, however, have created a system capable of generating an accurate map of a hand and its fingers using only a smartphone.

The system relies on machine learning to capture the movements of the hand. The smartphone's camera and the phone's own processing power are enough to detect gestures, even those of several hands at once. This is not easy at all, because as the hand moves, the fingers bump into each other, shift position, and do it all very quickly.

Comprehensive training

Just as people need to study to learn a language, the system developed at Google to translate sign language needs training. To keep it efficient, the researchers chose to have it recognize the palm first. From there, the fingers are analyzed separately.

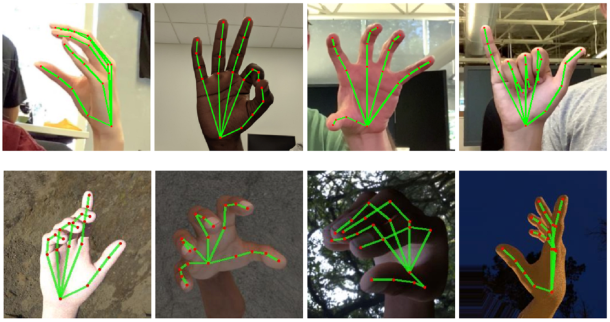

Another algorithm then examines the image and assigns it 21 coordinates. These points describe the positions of the knuckles and fingertips and the distances between them.
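To make the idea of 21 coordinates more concrete, here is a minimal Python sketch of how such a set of points might be stored and measured. It is not Google's code: the `normalize` and `distance` helpers, the use of 2D points, and the choice of point 0 as the reference point are all assumptions made for illustration.

```python
import math
from typing import List, Tuple

Point = Tuple[float, float]  # (x, y) image coordinates; 2D is an assumption
NUM_LANDMARKS = 21           # the number of points mentioned in the article


def normalize(landmarks: List[Point]) -> List[Point]:
    """Shift the hand so point 0 sits at the origin and scale it to unit size.

    Using point 0 as the reference is a hypothetical convention chosen here,
    not something the article specifies.
    """
    if len(landmarks) != NUM_LANDMARKS:
        raise ValueError(f"expected {NUM_LANDMARKS} points, got {len(landmarks)}")
    x0, y0 = landmarks[0]
    shifted = [(x - x0, y - y0) for x, y in landmarks]
    # Largest distance from the reference point; fall back to 1.0 if all points coincide.
    scale = max(math.hypot(x, y) for x, y in shifted) or 1.0
    return [(x / scale, y / scale) for x, y in shifted]


def distance(a: Point, b: Point) -> float:
    """Euclidean distance between two points, e.g. a knuckle and a fingertip."""
    return math.hypot(a[0] - b[0], a[1] - b[1])
```

Normalizing in this way means the same sign produces similar numbers whether the hand is near the camera or far from it, which is one plausible reason to work with relative distances rather than raw pixel positions.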

Getting there took extensive training. Researchers manually added these 21 coordinates to 30,000 images of hands making different signs under different lighting. This painstakingly assembled database is what feeds the machine learning system.

The trained system is used to determine the pose of the hand, which is then compared against a database of known gestures. The result is encouraging. The algorithms still need polishing, but they promise to improve on existing systems for translating sign language. The most interesting part is how few resources it needs: just a smartphone and its camera.
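The comparison against known gestures can be pictured as a nearest-neighbor lookup. The sketch below is only an illustration under stated assumptions: the article does not say how Google performs the matching, and the names `pose_distance` and `closest_gesture`, the averaging of point-to-point distances, and the `max_distance` threshold are all hypothetical.

```python
import math
from typing import Dict, List, Optional, Tuple

Point = Tuple[float, float]  # (x, y) coordinates of one hand point


def pose_distance(a: List[Point], b: List[Point]) -> float:
    """Average Euclidean distance between matching points of two hand poses."""
    if len(a) != len(b):
        raise ValueError("poses must have the same number of points")
    total = sum(math.hypot(ax - bx, ay - by) for (ax, ay), (bx, by) in zip(a, b))
    return total / len(a)


def closest_gesture(
    pose: List[Point],
    known: Dict[str, List[Point]],
    max_distance: float = 0.2,  # hypothetical cutoff, not a published value
) -> Optional[str]:
    """Return the name of the nearest known gesture, or None if none is close enough."""
    best_name: Optional[str] = None
    best_dist = float("inf")
    for name, reference in known.items():
        d = pose_distance(pose, reference)
        if d < best_dist:
            best_name, best_dist = name, d
    return best_name if best_dist <= max_distance else None
```

A lookup like this is cheap enough to run on a phone, but it only handles static hand shapes. Real sign language also depends on motion over time, which a production system would have to account for.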

Images: daveynin, Google